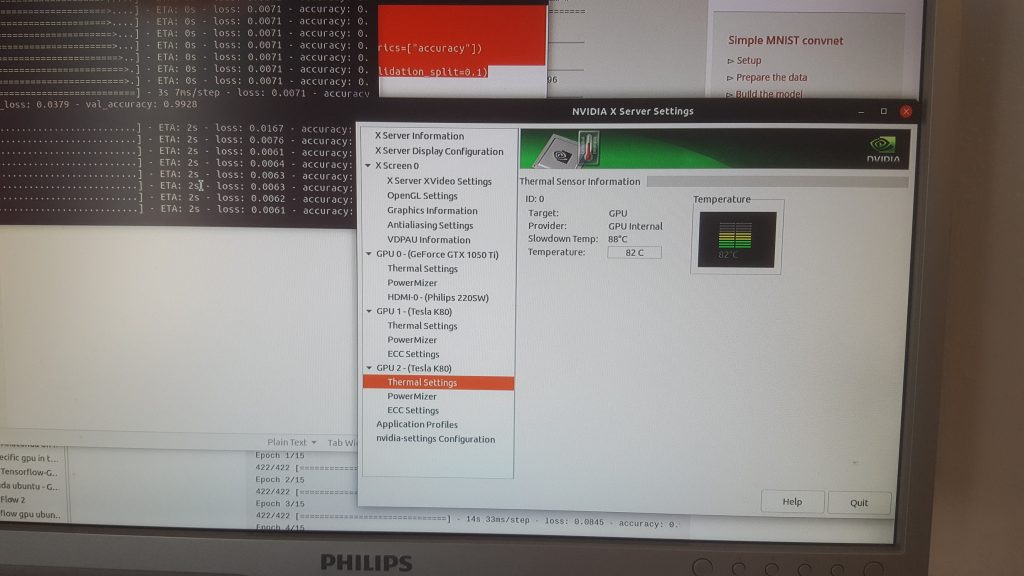

I finally harnessed a Tesla K80 for my AI modeling, but unfortunately overheating brings a lot of overhead, and the GPU is not superior to the CPU for every model.

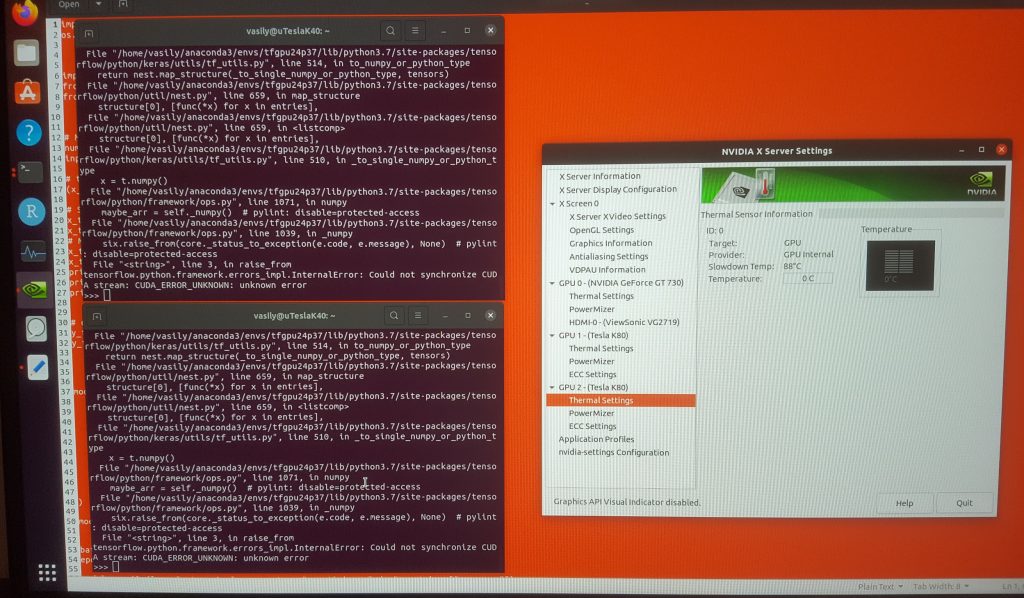

In my previous post I reported that I had successfully assembled two servers from cheap hardware bought on eBay. However, harnessing the GPUs was not easy. The Tesla K40, as already mentioned, fails with `Could not synchronize CUDA stream: CUDA_ERROR_UNKNOWN: unknown error`.

It is unlikely to be a flaw of my particular card, since I bought two from different sellers. It is also unlikely to be a defect of the K40 series (otherwise there would be many complaints on the web). Most likely it is an incompatibility with my TERRA mainboards. Unfortunately I cannot check this, since all the other PCs I have are relatively old and have no PCIe 3.0 x16 slot, which is necessary for the Tesla K40.

Anyway, the Tesla K80 does not have this problem (as long as you engage only one CPU), but cooling this powerful card is challenging. BTW, the power supply is also a small challenge: you need the 4-pin CPU power connector (plugging in the 4-pin Molex meant for [not so powerful] graphics cards is wrong). But let us get back to the cooling problem.

First I tried to cool it with a vacuum cleaner, but that vacuum cleaner sucks (i.e. it had poor suction power). The EU politicians are to blame: they limited the maximum allowed vacuum cleaner power first to 1600 W and then to 900 W (I would not be too surprised if the next law prescribes using only dusters and brooms).

Then, inspired by this video, I decided to do the same.

So open the cover,

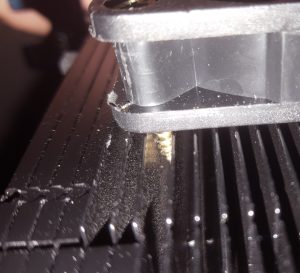

take the fans from this thing (originally intended to cool an HDD),

find suitable screws

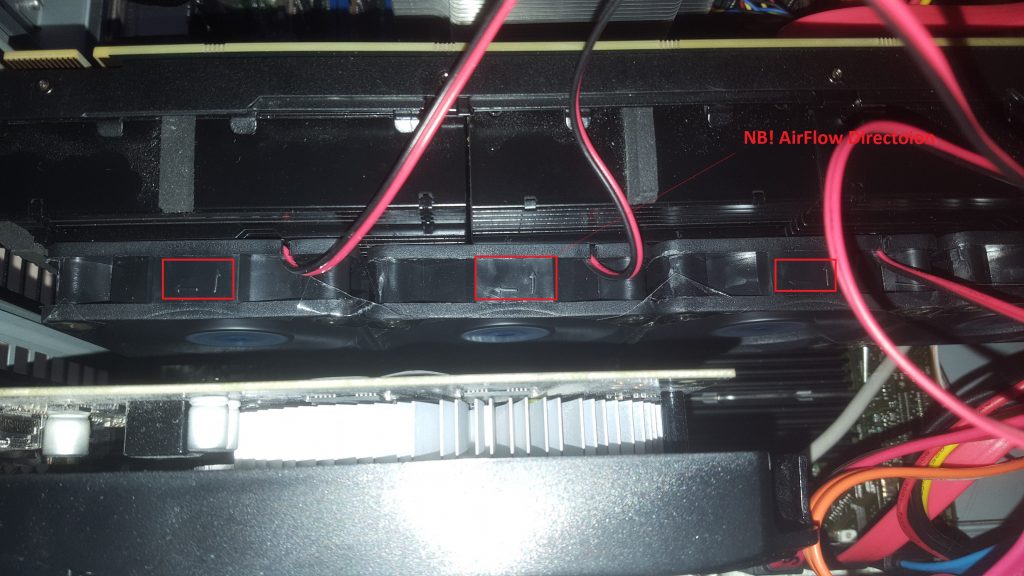

and finally I got it!

Note that covering the screws with Scotch tape is a cheap safety measure: the screws are driven not into solid metal but between the heatsink fins, so one might fall out because of temperature or vibration. The tape will keep it from falling onto the other graphics card located below, so the short-circuit danger is (partially) averted.

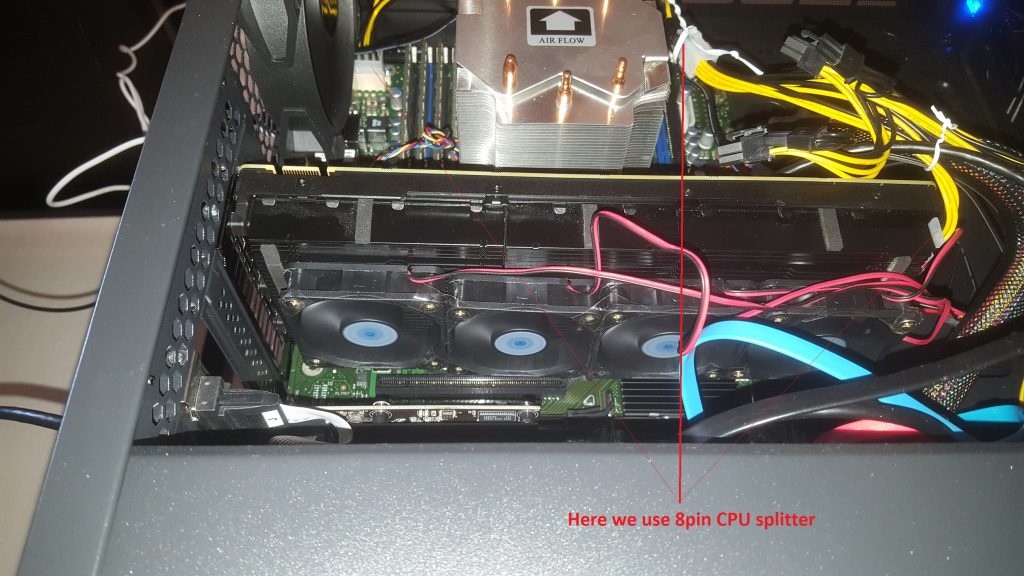

So connect the 2x 4-pin Molex to 4-pin CPU adapter

and install the card into the ATX tower.

Well, the card still gets too hot, probably because the graphics card below heats it and the CPU airflow may also warm it from above, so let us try the Makashi E-ATX tower.

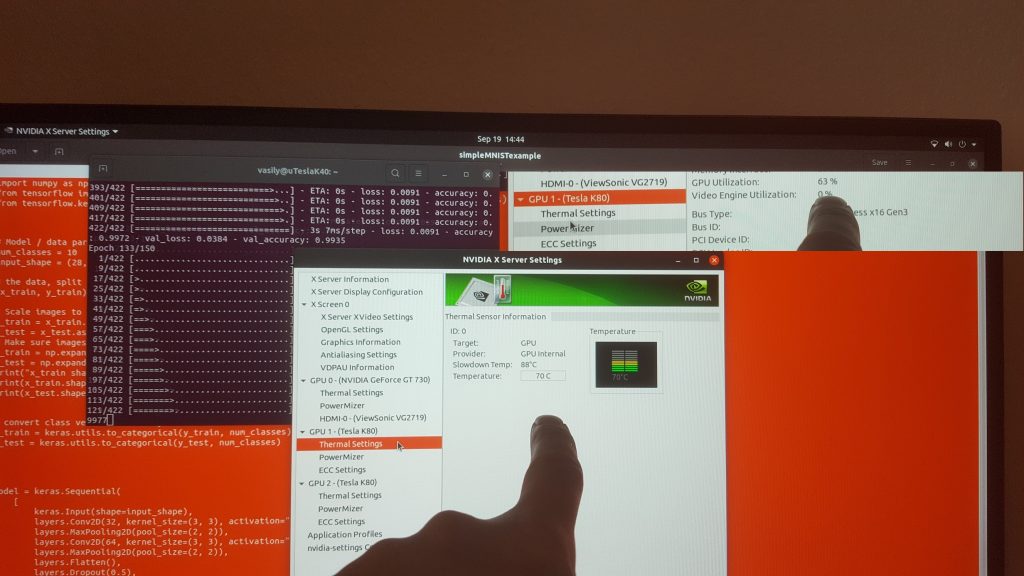

Aha! Much better (although not perfect): the temperature stabilizes around 70 °C (at a GPU utilization of 60%-70%).
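Readings like the temperature and utilization above can also be polled from a script instead of watching the screen. The following is only a minimal sketch, assuming `nvidia-smi` is on the PATH; the helper names and the 80 °C warning limit are my own illustrative choices, not values from the card's documentation.

```python
import subprocess

# Fields requested from nvidia-smi, in this order.
QUERY = "index,temperature.gpu,utilization.gpu"


def parse_gpu_stats(csv_text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, temp, util = [field.strip() for field in line.split(",")]
        stats.append(
            {"index": int(index), "temp_c": int(temp), "util_pct": int(util)}
        )
    return stats


def read_gpu_stats():
    """Ask nvidia-smi for the current readings (needs an NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_stats(result.stdout)


def too_hot(stats, limit_c=80):
    """Return the indices of GPUs above limit_c (80 is a hypothetical limit)."""
    return [s["index"] for s in stats if s["temp_c"] > limit_c]
```

Calling `too_hot(read_gpu_stats())` in a loop with a `time.sleep` between polls would give a crude overheating alarm during training. The parser assumes every field is numeric, so a device that reports `[N/A]` for a field would need extra handling.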

But can we engage both GPUs simultaneously?! Well, from the heating and cooling point of view we can, but after about the 45th epoch we again get this annoying `Could not synchronize CUDA stream: CUDA_ERROR_UNKNOWN: unknown error`.
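As long as the two-GPU run keeps crashing, one workaround is to pin a training run to a single device. A minimal sketch, assuming the standard CUDA environment variable `CUDA_VISIBLE_DEVICES` and that device `0` is the one you want (the helper name `restrict_to_gpu` is hypothetical):

```python
import os


def restrict_to_gpu(index):
    """Make only the GPU with this index visible to CUDA programs."""
    os.environ["CUDA_VISIBLE_DEVICES"] = str(index)


# Must run before the deep learning framework initializes CUDA,
# so call it before importing e.g. TensorFlow.
restrict_to_gpu(0)
```

The indices are the ones `nvidia-smi` lists. Setting the variable after the framework has already initialized CUDA has no effect on the running process.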

Conclusion: Now I can use the Tesla K80, but taking all the overhead into account I would say that this GPU is not as cool as it is hot!

For the photos above I used the Simple MNIST convnet Keras example as a test. The Tesla K80 was three times faster than my 24-core Xeon CPU (interestingly, the CPU was also utilized at about 70%). A factor of 3 might be worthwhile... but unfortunately it is not reached on all deep learning architectures. GPUs are good for convolutional and attention layers, but for sequential layers (which almost all my stock-picking models have) they are no better (and sometimes even a bit worse). Also, the energy saving is rather marginal, even at German electricity prices: about 150 W for the GPU vs. about 200 W is not a big deal.
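To put rough numbers on the energy argument, here is a small back-of-the-envelope sketch. The 200 W, 150 W and the factor of 3 are the figures from above; the 10-hour run length and the price of 0.40 EUR/kWh are purely hypothetical values chosen for illustration.

```python
def energy_kwh(power_w, hours):
    """Energy in kWh consumed at a constant power draw."""
    return power_w / 1000.0 * hours


def compare(cpu_w, gpu_w, cpu_hours, speedup, price_per_kwh):
    """Compare one training job on the CPU with the same job on the GPU.

    speedup is how many times faster the GPU finishes the job
    (1.0 means no gain, as with my sequential models).
    """
    cpu = energy_kwh(cpu_w, cpu_hours)
    gpu = energy_kwh(gpu_w, cpu_hours / speedup)
    return {
        "cpu_kwh": cpu,
        "gpu_kwh": gpu,
        "saved_eur": (cpu - gpu) * price_per_kwh,
    }


# Hypothetical 10-hour CPU job at an assumed 0.40 EUR/kWh.
no_gain = compare(200, 150, cpu_hours=10, speedup=1.0, price_per_kwh=0.40)
convnet = compare(200, 150, cpu_hours=10, speedup=3.0, price_per_kwh=0.40)
print(no_gain)
print(convnet)
```

With no speedup, the difference is just the 50 W gap over the length of the run, so the saving only becomes noticeable for models where the GPU also finishes faster.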
